A Primer on Approaches to Software Isolation

On the approaches, the differences between them, use cases and future possibilities

Introduction

The demand for portability in contemporary software development has grown over time due to shifting business requirements, the need for quick market responses, and ever-increasing server processing power. These dynamics raise concerns about orchestrating software to run on several platforms and serve a greater range of users while preserving cost-effectiveness and developer velocity. Software isolation, that is, running software as an isolated unit within a host system, is a response to these concerns and has changed how software is developed and deployed. Software can now be bundled with its dependencies into an isolated unit that can run on any compatible host. Multiple such bundles can run on the same host machine without interfering with one another. This makes software portable, allowing teams to:

quickly deploy software on different platforms without worrying about compatibility,

quickly create new instances of the software to address request surges,

and run multiple applications on the same machine, thereby saving on computing costs.

Virtual machines and containers are two approaches to software isolation. The two are closely related but should not be confused with each other, something to keep in mind as we explore each approach and its use cases. Let’s take a ride, shall we?

Virtual Machines as Game Changers

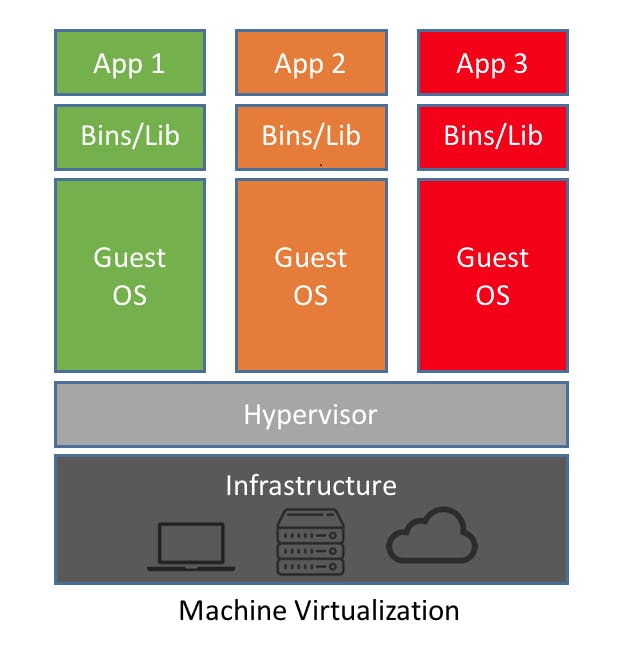

Historically, as computing power increased, running a single application alone on a server became less practical and cost-effective. Multiple applications needed to be accommodated on the same server without interfering with one another. Virtual Machines (VMs) were born to fill this gap. A VM is designed to run as a self-contained unit on a host machine. As the name implies, a VM is a software emulation of a computer that contains its own operating system (often referred to as a guest OS), applications, binaries, and libraries, as well as virtual hardware (virtual CPU, memory, storage, and network interfaces): pretty much everything that is required to run as an isolated unit.

VMs proved to be game changers in ensuring software portability and isolation. A Linux VM can run on a Windows host alongside other VMs. This is because each VM contains its own environment and draws the resources it needs from the host machine through software called a hypervisor, also known as a Virtual Machine Monitor (VMM).

The hypervisor provides a platform for managing and executing the guest operating system inside the virtual machine. It is able to map the physical hardware of the host machine to the various VMs running on it.

Image source: pawseysc
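
To make the hypervisor's bookkeeping role concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not how any real hypervisor is implemented: the `ToyHypervisor` and `VirtualMachine` names are hypothetical, and the model hands out whole CPUs and fixed blocks of memory, ignoring the scheduling and overcommitment that real hypervisors perform.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    """Hypothetical record of the virtual hardware given to one guest."""
    name: str
    vcpus: int
    memory_mb: int


@dataclass
class ToyHypervisor:
    """Hypothetical model: tracks host capacity and what each VM was given."""
    host_cpus: int
    host_memory_mb: int
    vms: dict = field(default_factory=dict)

    def free_cpus(self) -> int:
        return self.host_cpus - sum(vm.vcpus for vm in self.vms.values())

    def free_memory_mb(self) -> int:
        return self.host_memory_mb - sum(vm.memory_mb for vm in self.vms.values())

    def create_vm(self, name: str, vcpus: int, memory_mb: int) -> VirtualMachine:
        # Refuse duplicate names and requests the host cannot back
        # with physical resources (an assumption of this toy model).
        if name in self.vms:
            raise ValueError(f"a VM named {name} already exists")
        if vcpus > self.free_cpus() or memory_mb > self.free_memory_mb():
            raise RuntimeError(f"not enough host capacity for {name}")
        vm = VirtualMachine(name, vcpus, memory_mb)
        self.vms[name] = vm
        return vm


# Illustrative host: 8 CPUs and 16 GB of memory (made-up figures).
hypervisor = ToyHypervisor(host_cpus=8, host_memory_mb=16384)
hypervisor.create_vm("linux-guest", vcpus=4, memory_mb=8192)
hypervisor.create_vm("windows-guest", vcpus=2, memory_mb=4096)
print(hypervisor.free_cpus(), hypervisor.free_memory_mb())  # prints: 2 4096
```

Each guest sees only the virtual hardware it was given, while the hypervisor keeps the overall picture of what the physical host can still offer.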

There are two kinds of hypervisors, namely:

Bare-metal hypervisors: Also referred to as Type-1 hypervisors, these are installed directly on the hardware of the host machine. Because they interact with the underlying hardware directly, without a host operating system in between, they draw the resources needed to power their VMs with little overhead. The result is better performance and scalability. The drawback of bare-metal hypervisors, however, is that they are machine dependent. Examples of bare-metal hypervisors are VMware ESXi, Microsoft Hyper-V, and KVM.

Hosted hypervisors: Also referred to as Type-2 hypervisors, these are installed on the operating system of the host machine. The host operating system manages the hardware required for virtualization, and the hypervisor reaches the underlying hardware through it, which adds overhead that reduces the performance of VMs. However, the benefit of hosted hypervisors is that they are not machine dependent. Examples of hosted hypervisors include Oracle VirtualBox, VMware Workstation, and Parallels Desktop.

Hypervisors help achieve process isolation and portability by ensuring that each VM on the host has a predictable and fair share of the computing resources that it requires to run as a self-contained unit. With the introduction of VMs, cost savings were realized by consolidating multiple applications onto one host, speeding up server provisioning, and managing disasters more easily since replicas of VMs can be easily generated, stored, and shared. But as with every other tool, VMs have their drawbacks: each runs its own operating system, resulting in storage and memory overhead. In addition, virtualization technologies and configurations differ between environments, which can make it difficult to share virtual machines. VMs provide portability and isolation, but can these overheads be eliminated?

Now enter containers...

Containers: A Step Ahead?

Containerization, which started as an incremental shift in how code is packaged and shipped, has fundamentally altered how code is written, leading to more modular services. Containerization has been around for a long time: operating-system-level isolation such as FreeBSD jails dates back to 2000, and Linux Containers (LXC) followed in 2008. But since the introduction of Docker in 2013, companies have turned to containerization to improve the way their software is packaged, deployed, and delivered, giving rise to what is regarded as the container boom. But what are containers, and how are they an incremental shift in the way software is developed, packaged, and deployed?

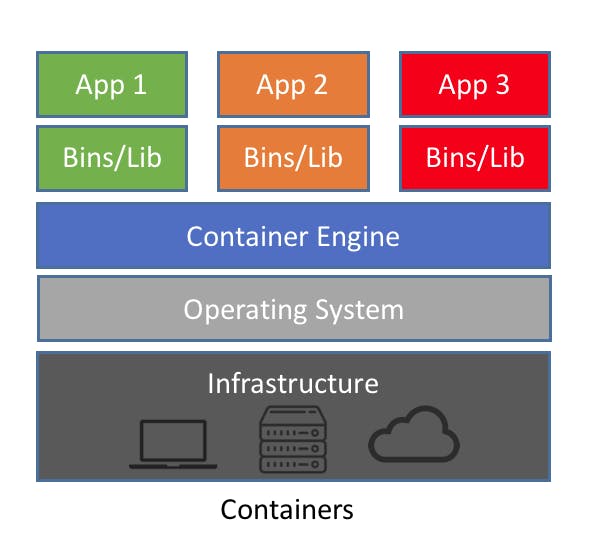

In simple terms, a container bundles everything that an application needs to run (code, config files, libraries, and dependencies) into a lightweight, portable form. Unlike VMs, containers do not include a full guest operating system with its own kernel; they share the kernel of their host and rely on containerization technologies such as Docker, LXD, or rkt to draw resources from it. Containers, therefore, reduce the memory and storage overhead associated with running virtual machines.

Image source: pawseysc
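
To put rough numbers on the overhead argument, here is a back-of-the-envelope sketch in Python. Every figure in it is an assumption chosen for illustration (the per-application footprint, the guest OS footprint, and the per-container runtime cost are made up, not measurements), and the function names are hypothetical.

```python
def vm_memory_mb(apps: int, app_mb: int, guest_os_mb: int) -> int:
    """Each VM carries its own guest OS alongside the application."""
    return apps * (app_mb + guest_os_mb)


def container_memory_mb(apps: int, app_mb: int, runtime_mb: int) -> int:
    """Containers share the host kernel; only a small per-container cost is added."""
    return apps * (app_mb + runtime_mb)


# Assumed, illustrative figures only.
APPS = 10           # number of isolated applications on the host
APP_MB = 256        # memory used by each application
GUEST_OS_MB = 512   # memory used by each VM's guest operating system
RUNTIME_MB = 16     # per-container bookkeeping cost

vm_total = vm_memory_mb(APPS, APP_MB, GUEST_OS_MB)
container_total = container_memory_mb(APPS, APP_MB, RUNTIME_MB)
print(vm_total, container_total)  # prints: 7680 2720
```

Under these assumed figures the gap comes entirely from repeating the guest operating system once per VM; real numbers depend heavily on the workload and the technologies involved.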

Containers make it possible to distribute more complex packages. If a service requires a database and a message broker, developers would traditionally need to painstakingly install and set up these dependencies on their local machines. Some time ago, a colleague and I spent a couple of days trying to install MySQL on her MacBook Air and import the data required for a project we were working on together. When a team collaborates on a project, differences in dependency versions among developers often lead to subtle errors and unexplained behaviour when a merge is finally done. With containers, the dependencies of the application and dummy data for the database can be bundled and distributed between teams. This ensures uniformity of dependency versions and eliminates time spent on manual installation. As described in this article by Paystack, containerization can be used as the basis for creating on-demand environments that let developers test and experiment without worrying about how their changes will affect other people’s work.
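
The version-disparity problem can be sketched in a few lines of Python. This is a simplified illustration, not a real tool: the `find_version_drift` function, the package names, and the version numbers are all hypothetical.

```python
def find_version_drift(pinned: dict, installed: dict) -> dict:
    """Return {package: (pinned_version, installed_version)} for every mismatch.

    A package that is missing from `installed` is reported with None.
    """
    drift = {}
    for package, wanted in pinned.items():
        found = installed.get(package)
        if found != wanted:
            drift[package] = (wanted, found)
    return drift


# Hypothetical versions the project expects (what a container image would fix).
pinned = {"database-driver": "8.0.1", "broker-client": "3.2.0"}

# Hypothetical versions found on two developers' laptops.
laptop_a = {"database-driver": "8.0.1", "broker-client": "3.2.0"}
laptop_b = {"database-driver": "5.7.9"}

print(find_version_drift(pinned, laptop_a))  # prints: {}
print(find_version_drift(pinned, laptop_b))
```

When every developer runs the same container image, the pinned set is the installed set, so this kind of drift has far fewer places to creep in. In a real Python project, the installed mapping could be built with the standard library's `importlib.metadata.version`.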

Containers vs VMs

Are containers a direct replacement for VMs? Not exactly! As this author puts it: “Containers are like tents, while VMs are like houses”. You don’t want to fill a tent with things that belong in a two-bedroom house. Containers should contain only the runtime dependencies required for your code to function. Containers should not carry drivers or other low-level binaries that interface directly with the operating system kernel. Because containers on a host share that kernel, the more such components a container contains, the more likely it is to affect other containers on the same host.

Containers offer isolation only at the software level by virtualizing the operating system of their host; VMs, on the other hand, offer stronger isolation at the hardware level by virtualizing the hardware resources of the host system, so that each VM has its own virtual CPU, memory, storage, and network interfaces.
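
On a Linux host, operating-system-level isolation is typically built on kernel namespaces, and a process can inspect its own. The sketch below is a hedged illustration: the `namespace_ids` helper is a hypothetical name, it reads the Linux-specific `/proc/self/ns` directory, and it returns an empty result on systems where that directory does not exist (for example macOS or Windows).

```python
import os
import platform


def namespace_ids(proc_dir: str = "/proc/self/ns") -> dict:
    """Map namespace name -> identifier for the current process.

    Returns an empty dict where the directory is unavailable.
    """
    ids = {}
    try:
        names = sorted(os.listdir(proc_dir))
    except OSError:
        return ids
    for name in names:
        try:
            # Each entry is a symbolic link whose target names the namespace.
            ids[name] = os.readlink(os.path.join(proc_dir, name))
        except OSError:
            continue
    return ids


print("kernel release:", platform.release())
for name, ident in namespace_ids().items():
    print(f"{name:>10} -> {ident}")
```

Run on a Linux host and then inside a typical container on that host, this would be expected to report the same kernel release but different identifiers for namespaces such as `pid`, `net`, and `mnt`. A VM, by contrast, would report whatever kernel its guest operating system runs.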

VMs are suitable for legacy systems where the operating system and hardware configuration required for their execution are no longer available, while containers, on the other hand, are suited for more modern cloud architectures.

As with everything else, neither containers nor VMs are a one-size-fits-all solution. There are many workflows for which the portability and flexibility associated with these technologies are not required. As a rule of thumb, if your application does not compete on reliability and flexibility, or if your development team is small and limited in experience, you are better off without these technologies, as they require expertise and specialization to handle effectively.

The Future

I expect VMs and containers to coexist in the future. As for containers, we can expect to see clever AI software bundled with its modeling tools, databases, and web frontends and distributed to developers around the world.

I think that containers have become important tools in software development and will continue to improve and influence how we develop, package, and distribute software. With these considerations in mind, I hope you invest in learning about containers and how to use them efficiently.

Let me know what you think in the comments section. I look forward to hearing from you.